Written by Suzanne Mistretta and Jake Heid

Large Language Models (LLMs) are specialized artificial intelligence (AI) models that can understand language and generate text-based content. As a mortgage advisory firm specializing in litigation support and analytical, underwriting, and servicing consulting services, Oakleaf is in a unique position to realize the full potential of these powerful models. This article describes Oakleaf’s developing capabilities and the lessons learned from applying the ChatGPT LLM to a large database of legal contracts.

The Opportunity

To support our clients, Oakleaf often researches the terms of residential mortgage-backed securities (RMBS) governing agreements (GAs), which requires identifying, comparing, and contrasting contractual terms contained in thousands of GAs. Such research has included:

• Researching RMBS GAs to identify the parties to the GA.

• Researching RMBS GAs having terms limiting or prohibiting mortgage loan modifications.

• Researching RMBS GAs to find deals having similar waterfall language.

• Researching RMBS GAs to audit whether Optional Termination requirements were complied with.

• Researching RMBS GAs to identify parties responsible for enforcing the trusts’ putback rights.

Historically, such research was generally performed manually by a team of analysts reading and searching through GAs.

To improve this analysis, Oakleaf recently applied GPT-4, a natural language processing model, to the task of quickly identifying specific provisions in GAs on a bulk basis. With GPT-4, numerous documents can be queried for specific provisions by engineering a prompt that produces the desired output. Oakleaf plans to expand its use of GPT-4 to identify patterns and trends in non-data inputs and will publish its findings in separate follow-up articles.

The Technology

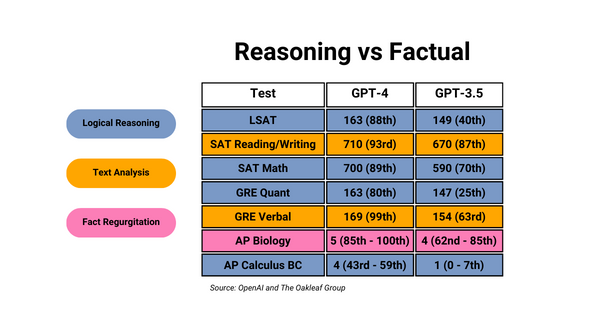

GPT-4 is trained on large amounts of text, which enables it to process language and produce coherent and contextually appropriate text. Key strengths include speed, API functionality for programmable use, and the ability to do simple reasoning and recognition tasks. However, there are some key weaknesses, most notably inconsistent output for identical input when using open-ended prompts. Further, LLMs “know” information only from their training, which can lead to hallucination (confident but incorrect answers) when their output goes unchecked.

GPT-4 does a great job with fact regurgitation and text analysis, but logical reasoning is more complex. For logical reasoning exercises, Oakleaf developed a prompting methodology to produce high confidence in the responses and employed a variance testing technique to help develop better prompts and reduce inaccuracies. Through iterative rounds of variance testing, Oakleaf identified ways to maximize GPT-4’s potential for processing content using logical reasoning.

Case Study

Oakleaf needed to review 128 RMBS GAs to determine whether the net interest margin holder or bond insurer is protected at the time optional termination is exercised. A Python script went through the GAs, and each termination section was submitted to GPT-4 along with directions.
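The extraction-and-submission step could be sketched roughly as follows. The heading pattern, the function names (`extract_termination_section`, `build_request`), and the rule that a section ends where the next heading begins are all illustrative assumptions, not Oakleaf’s actual script. The model call itself is left out, so the snippet runs without network access.

```python
import re
from typing import Optional

# Hypothetical heading pattern: a line that starts with "Section <number>".
# Real governing agreements vary in layout and would need their own pattern.
SECTION_HEADING = re.compile(
    r"^(?:Section|SECTION)\s+\d+(?:\.\d+)*\b.*$", re.MULTILINE
)


def extract_termination_section(agreement_text: str) -> Optional[str]:
    """Return the first section whose heading mentions termination, or None."""
    headings = list(SECTION_HEADING.finditer(agreement_text))
    for i, match in enumerate(headings):
        if "termination" in match.group(0).lower():
            # Assumed boundary: the section runs until the next heading.
            if i + 1 < len(headings):
                end = headings[i + 1].start()
            else:
                end = len(agreement_text)
            return agreement_text[match.start():end].strip()
    return None


def build_request(directions: str, section_text: str) -> list:
    """Pair the directions with the extracted section as chat-style messages."""
    return [
        {"role": "system", "content": directions},
        {"role": "user", "content": section_text},
    ]
```

The resulting message list has the shape most chat-style model APIs accept, so the same function can feed whichever client library is in use.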

What Worked and What Didn’t:

When prompted with only a single question, GPT-4 performed about as well as guessing (accuracy was just 27%). With little direction and no guidance on things to consider or mistakes to look out for, GPT-4 produced inconsistent output that was often incorrect. Moreover, because GPT-4 gave no interpretation, we could not see where it was making errors.

Asking GPT-4 to Explain Itself:

When prompted to give supporting text and an interpretation before its answer, GPT-4 showed moderately lower output variance than when prompted to give its answer first. With a prompt structured as Main Question + Supporting Text + Interpretation + Answer, accuracy increased to 34%.
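One way to request the "supporting text, then interpretation, then answer" ordering is to ask for labeled fields and parse them out of the reply. The field labels, the `RESPONSE_FORMAT` wording, and the `parse_response` helper below are hypothetical; the exact format Oakleaf used is not given here.

```python
import re

# Hypothetical instruction appended to the main question. The answer comes
# last so that the model writes its evidence and reasoning first.
RESPONSE_FORMAT = (
    "Respond in exactly this order:\n"
    "Supporting Text: <quote the relevant contract language>\n"
    "Interpretation: <explain what the quoted language means>\n"
    "Answer: <Y or N>"
)

LABELS = r"(?:Supporting Text|Interpretation|Answer)"

# Capture each label and everything up to the next label or end of reply.
FIELD = re.compile(
    rf"^({LABELS}):\s*(.*?)(?=^{LABELS}:|\Z)",
    re.MULTILINE | re.DOTALL,
)


def parse_response(reply: str) -> dict:
    """Split a labeled reply into fields; missing fields are simply absent."""
    return {label: body.strip() for label, body in FIELD.findall(reply)}
```

Keeping the supporting text and interpretation alongside the answer is what makes a wrong answer traceable during manual review.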

Detailed Prompting Greatly Increases Accuracy:

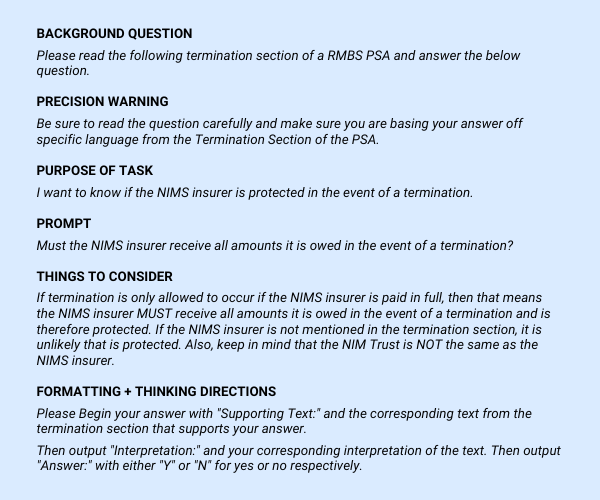

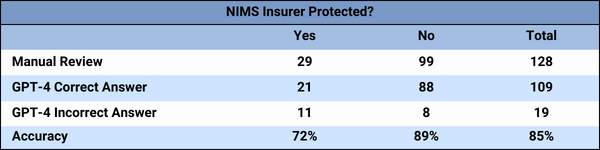

Providing GPT-4 with instructions, warnings, and a statement of purpose, as well as things to consider and thinking questions, increased accuracy to 85%.
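The components listed above can be assembled into a single prompt with a small helper. This is a minimal sketch: the `DetailedPrompt` class, its field names, and the section titles it emits are assumptions made for illustration, and the actual wording of Oakleaf’s prompts is not reproduced.

```python
from dataclasses import dataclass, field


@dataclass
class DetailedPrompt:
    """Prompt components named in the article; the layout is hypothetical."""

    purpose: str
    instructions: str
    main_question: str
    warnings: list = field(default_factory=list)
    things_to_consider: list = field(default_factory=list)
    thinking_questions: list = field(default_factory=list)

    def render(self) -> str:
        """Join the components into one prompt, skipping empty lists."""
        parts = [
            f"Purpose: {self.purpose}",
            f"Instructions: {self.instructions}",
        ]
        for title, items in (
            ("Warnings", self.warnings),
            ("Things to consider", self.things_to_consider),
            ("Thinking questions", self.thinking_questions),
        ):
            if items:
                bullets = "\n".join(f"- {item}" for item in items)
                parts.append(f"{title}:\n{bullets}")
        # The main question goes last, after all of the guidance.
        parts.append(f"Main question: {self.main_question}")
        return "\n\n".join(parts)
```

Treating each component as a separate field makes it easy to change one element at a time between rounds of testing.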

Variance Testing

Oakleaf developed a variance testing technique to assess the accuracy of GPT-4’s responses, which also helped determine the level of detail and format of the prompts that would improve the consistency and accuracy of those responses. The variance testing consisted of 15 trials for each deal, with the mode answer used as the final answer. The more often the same answer was given for the same input, the more likely it was to be correct.
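The trial-and-mode procedure can be sketched in a few lines. Here `ask` is a hypothetical callable that wraps the model call and returns a short answer such as “Y” or “N”. Passing it in as a parameter is an assumption that keeps the sketch independent of any particular API client.

```python
from collections import Counter
from typing import Callable, Tuple


def run_variance_test(
    ask: Callable[[str], str], prompt: str, trials: int = 15
) -> Tuple[str, float]:
    """Send the same prompt `trials` times; return the mode answer and its share."""
    answers = [ask(prompt) for _ in range(trials)]
    # most_common(1) yields the most frequent answer and its count. If two
    # answers tie, the one seen first wins.
    mode_answer, count = Counter(answers).most_common(1)[0]
    return mode_answer, count / trials
```

The returned share (for example, 8 of 15) is the agreement signal: a high share suggests a stable answer, and a low share marks the deal for a closer look.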

Through the variance testing, Oakleaf was able to develop the detailed prompting needed to increase the accuracy of GPT-4’s responses to 85% from the initial 27% with simple prompting. Importantly, the variance testing allowed Oakleaf to identify those deals that were likely to have an incorrect response.

Of the 128 deals tested, 99 produced identical responses for all 15 trials. Of those 99 deals, 94% (or 93) were correctly answered. For the other six deals, GPT-4 produced the incorrect response 100% of the time.

The remaining 29 deals had inconsistent responses, and roughly half of them (13 deals) had incorrect answers. In the variance testing, a deal with inconsistent answers was likely to be wrong if fewer than 14 of the 15 answers (80%) were the same. For instance, if 8 answers were “Y” and 7 were “N”, the final mode of “Y” was likely to be wrong.
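The triage rule described above might be applied across a batch of deals as follows. The `flag_for_review` name and the deal identifiers in the usage example are hypothetical. The default of 14 matching answers follows the count quoted above and is exposed as a parameter so that it can be tuned.

```python
from collections import Counter
from typing import Dict, List


def flag_for_review(
    answers_by_deal: Dict[str, List[str]], min_agreeing: int = 14
) -> List[str]:
    """Return deals whose mode answer appears fewer than `min_agreeing` times."""
    flagged = []
    for deal, answers in answers_by_deal.items():
        _, count = Counter(answers).most_common(1)[0]
        if count < min_agreeing:
            flagged.append(deal)
    return flagged


# Usage with made-up deal names and answers:
trials = {
    "Deal A": ["Y"] * 15,             # fully consistent
    "Deal B": ["Y"] * 8 + ["N"] * 7,  # the 8-to-7 split from the example
    "Deal C": ["N"] * 14 + ["Y"],     # a single dissenting trial
}
print(flag_for_review(trials))  # ['Deal B']
```

Note that a rule like this cannot catch a deal that is answered the same way every time but incorrectly; those only surface through sampling or full manual review.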

While GPT-4 cannot completely replace manual work, the productivity gains and efficiencies achieved when it is used in conjunction with variance testing are significant. Variance testing is key to successful execution because it helps develop better prompts, which increases accuracy. It also helps identify likely errors so that manual reviews can be targeted. Lastly, and most importantly, it reduced the manual research from 128 GAs to 19 (6 consistently wrong responses + 13 incorrect answers among the inconsistent responses). It also reduced the time spent on manual research, since the searched text is used to prompt GPT-4 and the responses include the relevant sections and text.

Results

The GPT-4-based review process described above achieved 85% accuracy, correctly categorizing 109 out of 128 deals in the sample population.
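The 109-deal figure follows from the counts reported in the Variance Testing section, as this short arithmetic check shows. The variable names are ours, and the numbers are taken directly from the article.

```python
total_deals = 128
consistent = 99           # identical answer in all 15 trials
consistent_wrong = 6      # consistently answered, but incorrectly
inconsistent = total_deals - consistent   # 29 deals with mixed answers
inconsistent_wrong = 13   # mode answer was incorrect

correct = (consistent - consistent_wrong) + (inconsistent - inconsistent_wrong)
incorrect = consistent_wrong + inconsistent_wrong
accuracy = correct / total_deals

print(correct, incorrect, f"{accuracy:.1%}")  # 109 19 85.2%
```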

Detailed prompting combined with variance testing increases the power of GPT-4 by automating manually intensive tasks with a high degree of accuracy. The potential to analyze and produce results from non-data sources is significant, and Oakleaf plans to use this technology for its clients across all business lines. The outcomes of Oakleaf’s GPT-4 projects will be shared in future articles, showcasing the potential of AI-driven solutions to revolutionize the landscape of advisory services.

To learn more about the transformative capabilities of GPT-4 and Oakleaf’s comprehensive suite of services, please reach out to Suzanne Mistretta.
